and human.

Full-Text Indexing

As its name implies, full-text indexing puts every word on the page into a database for searching. AltaVista, Google, Infoseek and Excite are examples of full-text databases. Full-text indexing helps you find every reference to a specific name or term. A general topic search is not very useful in such a database, however, and one has to dig through a lot of "false drops" (returned pages that have nothing to do with the search). In this case the websites are indexed by computer software. This software, called “spiders” or “robots”, automatically seeks out Web sites on the Internet and retrieves information from those sites (matching the search criteria) using instructions written into the software. This information is then automatically entered into a database.
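As a rough illustration of "every word on the page is put into a database", here is a minimal in-memory sketch of a full-text (inverted) index. The page URLs and texts are hypothetical, and a real engine would store the index on disk and add ranking; the point is only that every word is indexed, so a one-word query returns every page containing it, including false drops.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_full_text_index(pages):
    """Map every word on every page to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, word):
    """Return the sorted URLs of all pages containing the word."""
    # .get() avoids inserting empty entries into the defaultdict.
    return sorted(index.get(word.lower(), set()))


# Hypothetical pages: a query for "jaguar" returns both the animal and the car.
pages = {
    "http://example.com/a": "Jaguar is a large cat native to the Americas.",
    "http://example.com/b": "The Jaguar XK is a car made by Jaguar Cars.",
    "http://example.com/c": "Cats sleep for most of the day.",
}
index = build_full_text_index(pages)
print(search(index, "jaguar"))
```

Someone looking for the animal gets the car page as well: that second hit is exactly the kind of "false drop" described above.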

Keyword Indexing

In keyword indexing, only “important” words or phrases are put into the database. Lycos is a good example of keyword indexing.
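The post does not say how "important" words are chosen. The sketch below assumes one simple heuristic (the most frequent words left after removing stop words) purely to show the contrast with full-text indexing: each page is indexed under only a handful of terms. The stop-word list, the value of `k` and the sample text are illustrative assumptions.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"a", "an", "the", "is", "of", "to", "and", "in", "for", "by", "on"}


def extract_keywords(text, k=3):
    """Return the k most frequent non-stop-words as a stand-in for 'important' words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    # Sort by descending count, then alphabetically so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [word for word, _ in ranked[:k]]


def build_keyword_index(pages, k=3):
    """Index each page only under its top-k keywords."""
    index = {}
    for url, text in pages.items():
        for word in extract_keywords(text, k):
            index.setdefault(word, set()).add(url)
    return index


sample = ("The crawler fetches pages. The crawler parses pages "
          "and the crawler stores pages in an index.")
print(extract_keywords(sample))  # ['crawler', 'pages', 'fetches']
kw_index = build_keyword_index({"http://example.com/p": sample})
```

Unlike the full-text approach, a search for "stores" or "index" finds nothing here, because those words were not judged important enough to index.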

Human Indexing

Yahoo and parts of Magellan are among the few examples of human indexing. In keyword indexing, all of the work is done by a computer program called a "spider" or a "robot". In human indexing, a person examines the page and picks a very few key phrases that describe it. This lets the user find a good starting set of works on a topic, assuming the human chose that topic as one that describes the page. This is how directory-based web databases are developed.

Methods of Indexing

Posted on 8:56 PM by Srinivas

There are two important methods of indexing used in web database creation: full-text and human.


Web Crawler Application Design

The Web Crawler Application is divided into three main modules.

1. Controller

2. Fetcher

3. Parser

Controller Module - This module provides the Graphical User Interface (GUI) for the web crawler and is responsible for controlling the crawler's operations. The GUI lets the user enter the start URL, enter the maximum number of URLs to crawl, and view the URLs being fetched. It controls the Fetcher and Parser.

Fetcher Module - This module starts by fetching the page at the start URL specified by the user. It also retrieves all the links on each fetched page and keeps doing so until the maximum number of URLs is reached.

Parser Module - This module parses the pages at the URLs fetched by the Fetcher module and saves their contents to disk.
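The three-module split can be sketched roughly as follows. This is not the author's application: the class names mirror the modules, the GUI is replaced by a returned list of fetched URLs, and the download function is injected so that the example runs against a hypothetical in-memory "web" instead of the network (in practice it might wrap an HTTP GET). The `page_N.html` file naming is also an assumption.

```python
import os
import tempfile
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


class Fetcher:
    """Fetches a page and returns its HTML plus the absolute links it contains."""

    def __init__(self, get_page):
        self.get_page = get_page  # hypothetical callable: url -> html

    def fetch(self, url):
        html = self.get_page(url)
        extractor = LinkExtractor()
        extractor.feed(html)
        return html, [urljoin(url, link) for link in extractor.links]


class Parser:
    """Saves the contents of each fetched page to disk."""

    def __init__(self, out_dir):
        self.out_dir = out_dir

    def save(self, number, html):
        path = os.path.join(self.out_dir, f"page_{number}.html")
        with open(path, "w", encoding="utf-8") as f:
            f.write(html)
        return path


class Controller:
    """Drives the Fetcher and Parser from a start URL up to max_urls pages."""

    def __init__(self, fetcher, parser):
        self.fetcher = fetcher
        self.parser = parser

    def crawl(self, start_url, max_urls):
        queue, seen, fetched = deque([start_url]), {start_url}, []
        while queue and len(fetched) < max_urls:
            url = queue.popleft()
            html, links = self.fetcher.fetch(url)
            self.parser.save(len(fetched), html)
            fetched.append(url)  # a GUI would display this list as it grows
            for link in links:
                if link not in seen:
                    seen.add(link)
                    queue.append(link)
        return fetched


# Hypothetical in-memory web used instead of real HTTP requests.
WEB = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/">Home</a> <a href="/c">C</a>',
    "http://example.com/b": "<p>No links here.</p>",
    "http://example.com/c": "<p>Leaf page.</p>",
}

with tempfile.TemporaryDirectory() as out_dir:
    controller = Controller(Fetcher(lambda url: WEB.get(url, "")), Parser(out_dir))
    fetched = controller.crawl("http://example.com/", max_urls=3)
    saved = sorted(os.listdir(out_dir))
print(fetched)
```

Keeping the download function injectable is a design choice that lets the Controller and Parser be exercised without network access.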


Storing & Indexing the web content

Indexing the web content

Similar to the index of a book, a search engine extracts and builds a catalog of all the words that appear on each web page, along with the number of times each word appears on that page. Indexing web content is a challenging task, assuming an average of 1000 words per web page and billions of such pages. Indexes are used for keyword searches, so they have to be held in memory to give quick access to search results. Indexing starts with parsing the website content using a parser. Any parser designed to run on the entire Web must handle a huge array of possible errors. The parser extracts the relevant information from a web page by excluding certain common words (such as a, an and the; these are known as stop words), HTML tags, JavaScript and other invalid characters. A good parser can also eliminate content that recurs across a site's pages, such as navigation links, so that it is not counted as part of each page's content. Once indexing is complete, the results are stored in memory in sorted order, which makes retrieval fast. Indexes are updated periodically as new content is crawled. Some indexes also build a dictionary (lexicon) of all words available for searching. A lexicon also helps correct mistyped words by showing the corrected versions in a search result. Part of a search engine's success lies in how its indexes are built and used. Various algorithms are used to optimize these indexes so that relevant results are found easily without heavy use of computing resources.
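The parsing and indexing steps above can be sketched with Python's standard `html.parser` and `difflib` modules. The assumptions are stated plainly: navigation links are recognized only by a `<nav>` element, the stop-word list is a tiny illustrative subset, and `difflib.get_close_matches` stands in for whatever spelling-correction method a real engine uses against its lexicon.

```python
import re
from collections import Counter
from difflib import get_close_matches
from html.parser import HTMLParser

STOP_WORDS = {"a", "an", "the", "and", "of", "to", "is"}  # illustrative subset only


class TextExtractor(HTMLParser):
    """Keeps visible text; drops tags and anything inside <script>, <style> or <nav>."""

    SKIP = {"script", "style", "nav"}

    def __init__(self):
        super().__init__()
        self.depth = 0  # greater than 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if not self.depth:
            self.chunks.append(data)


def index_page(html):
    """Return (word, count) pairs for a page, sorted by word, without stop words."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    words = re.findall(r"[a-z]+", " ".join(extractor.chunks).lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return sorted(counts.items())


def suggest(word, lexicon):
    """Suggest the closest lexicon entry for a possibly mistyped query word."""
    matches = get_close_matches(word.lower(), lexicon, n=1)
    return matches[0] if matches else None


html = ('<html><nav><a href="/">Home</a></nav><script>var x = 1;</script>'
        '<p>The crawler indexes the pages of the web.</p><p>Crawler output is stored.</p></html>')
entries = index_page(html)
lexicon = [word for word, _ in entries]
print(entries)
print(suggest("crawlr", lexicon))  # crawler
```

The navigation link text ("Home") and the script body never reach the index, and the sorted word list doubles as a small lexicon for the spelling suggestion.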


Storing the Web Content

In addition to indexing the web content, the individual pages are also stored in the search engine's database. Because disk storage is cheap, search engines have very large storage capacities, often running into terabytes of data. However, retrieving this data quickly and efficiently requires special distributed and scalable data storage. The amount of data a search engine can store is limited by the amount of data it can retrieve for search results. At the time of writing, Google could index and store about 3 billion web documents, far more than any other search engine of that period. "Spiders" take a Web page's content and create key search words that enable online users to find the pages they're looking for.


Robot Protocol

Web sites also often have restricted areas that crawlers should not crawl. To address this, many Web sites adopted the Robot protocol (the Robots Exclusion Protocol), which establishes guidelines for crawlers to follow. Over time, the protocol has become the unwritten law of the Internet for Web crawlers. It specifies that Web sites wishing to keep crawlers out of certain areas or pages place a file called robots.txt at the root of the Web site. Ethical crawlers then skip the disallowed areas. The following is an example robots.txt file, followed by an explanation of its format:

```
# robots.txt for http://somehost.com/

User-agent: *
Disallow: /cgi-bin/
Disallow: /registration # Disallow robots on registration page
Disallow: /login
```

The first line of the sample file has a comment on it, as denoted by the hash (#) character. Crawlers reading robots.txt files should ignore any comments.

The third line of the sample file specifies the User-agent to which the Disallow rules following it apply. User-agent is the term for the program that accesses a Web site; each browser or crawler sends a User-agent value identifying itself with every request to a Web server. Typically, however, Web sites want to keep all robots (User-agents) out of certain areas, so they use an asterisk (*) as the User-agent value, which makes the rules that follow apply to every User-agent. The lines following the User-agent line are called disallow statements. They define the Web site paths that crawlers are not allowed to access. For example, the first disallow statement in the sample file tells crawlers not to crawl any links that begin with “/cgi-bin/”. Thus, according to that line, both of the following URLs are off limits to crawlers:

http://somehost.com/cgi-bin/

http://somehost.com/cgi-bin/register (Searching Indexing Robots and Robots.txt)
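Python's standard library ships a robots.txt parser, `urllib.robotparser.RobotFileParser`, so the rules above can be checked without writing a parser by hand. The sketch below feeds it the sample file directly with `parse()` instead of downloading it with `read()`; "MyCrawler" is a hypothetical User-agent name. One caution: in robots.txt a blank line ends a record, so the User-agent line and its Disallow lines must not be separated by blank lines.

```python
from urllib.robotparser import RobotFileParser

# The sample robots.txt from the text (comment, blank line, then one record).
ROBOTS_TXT = """\
# robots.txt for http://somehost.com/

User-agent: *
Disallow: /cgi-bin/
Disallow: /registration # Disallow robots on registration page
Disallow: /login
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())  # comments, including the inline one, are ignored

for url in ["http://somehost.com/cgi-bin/",
            "http://somehost.com/cgi-bin/register",
            "http://somehost.com/login",
            "http://somehost.com/index.html"]:
    # "MyCrawler" is a hypothetical User-agent; the * record applies to it.
    print(url, rp.can_fetch("MyCrawler", url))
```

Disallow paths are matched as prefixes, so `/login` also blocks, for example, `/login.html`.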

Competing search engines Google, Yahoo!, Microsoft Live and Ask have announced their support for 'autodiscovery' of sitemaps. The autodiscovery method lets you specify in your robots.txt file where your sitemap is located.
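Under the sitemaps.org protocol, autodiscovery is a `Sitemap:` line in robots.txt giving the full URL of the sitemap, and that line is independent of any User-agent record. A small sketch of reading it back with the standard library follows; the sitemap URL is hypothetical, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that also advertises its sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/

Sitemap: http://somehost.com/sitemap.xml
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())
# site_maps() returns the listed sitemap URLs, or None if there are none.
sitemaps = rp.site_maps()
print(sitemaps)  # ['http://somehost.com/sitemap.xml']
```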


Crawling Techniques


Focused Crawling

A general-purpose Web crawler gathers as many pages as it can from a particular set of URLs, whereas a focused crawler is designed to gather only documents on a specific topic, reducing network traffic and downloads. The goal of the focused crawler is to selectively seek out pages that are relevant to a predefined set of topics. The topics are specified not with keywords but with exemplary documents. Rather than collecting and indexing all accessible web documents so as to answer every possible ad-hoc query, a focused crawler analyzes its crawl boundary to find the links most likely to be relevant for the crawl, and avoids irrelevant regions of the web. This leads to significant savings in hardware and network resources and helps keep the crawl more up to date. The focused crawler has three main components: a classifier, which makes relevance judgments on crawled pages to decide on link expansion; a distiller, which measures the centrality of crawled pages to determine visit priorities; and a crawler with dynamically reconfigurable priority controls, governed by the classifier and distiller. The most crucial evaluation of focused crawling is the harvest ratio, the rate at which relevant pages are acquired and irrelevant pages are filtered out of the crawl. The harvest ratio must be high; otherwise the focused crawler spends a lot of time merely eliminating irrelevant pages, and an ordinary crawler may be the better choice.
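A heavily simplified, best-first sketch of the idea, under these assumptions: the "classifier" is just cosine similarity between a page's word counts and those of the exemplary documents, there is no distiller, a link's priority is the relevance of the page it was found on, and the web is a hypothetical in-memory graph with short page ids. It also computes the harvest ratio as relevant pages divided by pages fetched.

```python
import heapq
import math
import re
from collections import Counter


def vectorize(text):
    """Bag-of-words term counts for a text."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def focused_crawl(web, seeds, exemplars, threshold=0.2, max_pages=10):
    """Best-first crawl of an in-memory web {page_id: (text, links)}.

    Returns (fetched_page_ids, harvest_ratio)."""
    topic = vectorize(" ".join(exemplars))
    frontier = [(-1.0, page) for page in seeds]  # negated priority: heapq is a min-heap
    heapq.heapify(frontier)
    seen, fetched, relevant = set(seeds), [], 0
    while frontier and len(fetched) < max_pages:
        _, page = heapq.heappop(frontier)
        text, links = web[page]
        fetched.append(page)
        score = cosine(vectorize(text), topic)
        if score < threshold:
            continue  # judged irrelevant: its links are not expanded
        relevant += 1
        for link in links:
            if link not in seen and link in web:
                seen.add(link)
                heapq.heappush(frontier, (-score, link))
    harvest_ratio = relevant / len(fetched) if fetched else 0.0
    return fetched, harvest_ratio


WEB = {
    "s": ("web crawler search engine index", ["a", "b"]),
    "a": ("crawler fetches pages for the search index", ["c"]),
    "b": ("cooking recipes with pasta and tomato", ["d"]),
    "c": ("search engine ranking of crawled pages", []),
    "d": ("crawler search index engine", []),
}
fetched, ratio = focused_crawl(WEB, ["s"], ["a web crawler feeds the search engine index"])
print(fetched, ratio)  # ['s', 'a', 'b', 'c'] 0.75
```

Page "d" is never fetched because it is only reachable through the off-topic page "b", which shows the crawl avoiding an irrelevant region; it also shows the trade-off, since a relevant page behind an irrelevant one is missed.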

Distributed Crawling

Indexing the web is a challenge because of its growing and dynamic nature. As the Web grows, it has become imperative to parallelize the crawling process in order to finish downloading pages in a reasonable amount of time. A single crawling process, even a multithreaded one, is insufficient for large-scale engines that need to fetch large amounts of data rapidly. When a single centralized crawler is used, all the fetched data passes through a single physical link. Distributing the crawling activity across multiple processes helps build a scalable, easily configurable and fault-tolerant system. Splitting the load decreases the hardware requirements of each machine while increasing overall download speed and reliability. Each task is performed in a fully distributed fashion; that is, no central coordinator exists.
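The post does not say how work is split without a central coordinator. One common scheme, assumed here rather than taken from the source, is to hash each URL's host name: every crawler process computes the same owner for a URL independently, keeps links it owns and forwards the rest. A stable hash (SHA-1) is used because Python's built-in `hash()` for strings is randomized per process. The host names and process count below are hypothetical.

```python
import hashlib
from urllib.parse import urlsplit


def assign_crawler(url, num_crawlers):
    """Pick which crawler process owns a URL by hashing its host name.

    Every process computes the same answer on its own, so no coordinator is
    needed, and all pages of one site go to the same process."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha1(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_crawlers


def route_links(links, my_id, num_crawlers):
    """Split newly found links into those this process keeps and those it forwards."""
    keep, forward = [], {}
    for link in links:
        owner = assign_crawler(link, num_crawlers)
        if owner == my_id:
            keep.append(link)
        else:
            forward.setdefault(owner, []).append(link)
    return keep, forward


links = [
    "http://a.example/x",
    "http://a.example/y",
    "http://b.example/",
    "http://c.example/page",
]
keep, forward = route_links(links, my_id=0, num_crawlers=4)
print(keep, forward)
```

Hashing by host rather than by full URL also keeps per-site politeness (for example, honoring robots.txt and rate limits) within a single process.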


Crawler Based Search Engines

Crawler-based search engines create their listings automatically. Computer programs called ‘spiders’ build them, not human selection. They are not organized by subject categories; a computer algorithm ranks all pages. Such search engines are huge and often retrieve a lot of information. For complex searches, they let you search within the results of a previous search to refine the results. These search engines contain the full text of the web pages they link to, so one can find pages by matching words in the pages one wants.


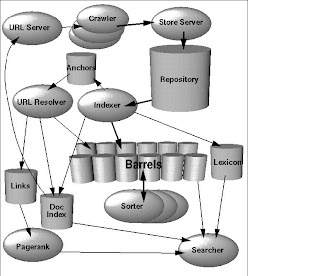

HOW SEARCH ENGINES WORK

Search engines index tens to hundreds of millions of web pages involving a comparable number of distinct terms, and answer tens of millions of queries every day. Despite the importance of large-scale search engines on the web, very little academic research has been conducted on them. Furthermore, due to rapid advances in technology and web proliferation, creating a web search engine today is very different from three years ago. There are differences in the ways various search engines work, but they all perform three basic tasks:

1. They search the Internet or select pieces of the Internet based on important words.

2. They keep an index of the words they find, and where they find them.

3. They allow users to look for words or combinations of words found in that index.
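Tasks 2 and 3 can be sketched together: a positional index records not only which pages contain a word but where on the page it occurs, and that position data lets a query match a combination of words (all words present) or an exact phrase (words adjacent). This is an illustrative in-memory sketch with hypothetical pages, not how any particular engine stores its index.

```python
import re
from collections import defaultdict


def words_of(text):
    """Lower-case alphanumeric tokens of a text."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_positional_index(pages):
    """word -> {url: [positions]}: which pages a word is on, and where."""
    index = defaultdict(lambda: defaultdict(list))
    for url, text in pages.items():
        for pos, word in enumerate(words_of(text)):
            index[word][url].append(pos)
    return index


def search_all(index, query):
    """Sorted URLs of pages containing every query word (Boolean AND)."""
    words = words_of(query)
    if not words:
        return []
    postings = [set(index.get(w, {})) for w in words]
    return sorted(set.intersection(*postings))


def search_phrase(index, phrase):
    """Sorted URLs where the query words appear consecutively, in order."""
    words = words_of(phrase)
    hits = []
    for url in search_all(index, phrase):
        starts = set(index[words[0]][url])
        for offset, word in enumerate(words[1:], start=1):
            # Keep start positions whose following word sits at start + offset.
            starts &= {p - offset for p in index[word][url]}
        if starts:
            hits.append(url)
    return hits


pages = {
    "http://example.com/1": "web search engines crawl the web",
    "http://example.com/2": "the engines of search",
    "http://example.com/3": "web pages",
}
index = build_positional_index(pages)
print(search_all(index, "search engines"))     # ['http://example.com/1', 'http://example.com/2']
print(search_phrase(index, "search engines"))  # ['http://example.com/1']
```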

A search engine finds information for its database by accepting listings sent in by authors who want exposure, or by getting the information from their "web crawlers," "spiders," or "robots," programs that roam the Internet storing links to and information about each page they visit.

A web crawler is a program that downloads and stores Web pages, often for a Web search engine. Roughly, a crawler starts off by placing an initial set of URLs, S0, in a queue, where all URLs to be retrieved are kept and prioritized. From this queue, the crawler gets a URL (in some order), downloads the page, extracts any URLs in the downloaded page, and puts the new URLs in the queue. This process is repeated until the crawler decides to stop. Collected pages are later used for other applications, such as a Web search engine or a Web cache.

The most important measures for a search engine are its search performance, the quality of its results, and its ability to crawl and index the web efficiently. The primary goal is to provide high-quality search results over a rapidly growing World Wide Web. Some efficient and recommended search engines are Google, Yahoo and Teoma, which share some common features and are standardized to some extent.
